Google’s new Allo messaging program, one of a handful of products and initiatives announced at this week’s I/O developer conference, has one neat gimmick: an artificial intelligence-driven response feature that analyzes a conversation and provides users with a selection of pre-written text replies. The software is meant to make texting easier — doing so, of course, by scanning and analyzing your entire history of texting and messaging within Allo, determining habits and patterns, and using visual-recognition software to predict how one might respond.

This predictive feature is becoming ubiquitous across Google’s products. Its newest email client, Inbox, features the same type of prepackaged responses and predictive text — generated, as in Allo, by extensively scanning and analyzing your emails. The company’s new Gboard keyboard for the iPhone similarly “remembers” what you type, and suggests words to you as you use it. (That data is — Google says — stored locally on your phone, and not sent to Google. Yet.)

Who is asking for this feature? “Another way Allo lets you express yourself is by typing less,” the developer demoing the app said. “A lot less.”

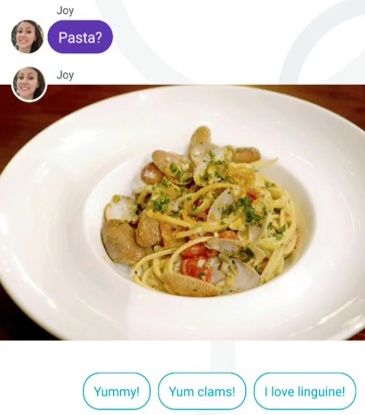

What? One-button responses make sense for quick acknowledgements, such as “Okay” or “See you soon!” But do you really want to go back and forth with a contact the way you’d play an RPG? (“Yum clams!”) Apple briefly tried a less personalized approach to message prediction in an earlier version of iOS before quietly burying the feature. Canned replies like these have yet to overcome the slightly icky feeling that you’re letting a computer impersonate you. The line between the computer as an auxiliary helper and the computer as an extension of the self is slowly but surely blurring.

And meanwhile, Google is collecting an enormous amount of information about you, specifically about how you chat with and email your friends, ostensibly for a feature with relatively few use cases. So what else is going on?

I don’t mean to imply that Google is up to something insidious, only that large tech companies rarely build complex machine-learning systems like these for a single purpose. At this point everyone knows (I hope) that Google makes its money not by selling its enormously useful and well-made products and services, but by selling advertisers access to you, targeted using the data collected through those services.

So what do you do when you’ve got the data and the neural networks needed to, theoretically, imitate an individual person’s conversational patterns? It’s not difficult to imagine ad targeting that is even more personalized than it is now. Instead of selecting for specific search terms or demographics, the advertising copy itself could be personalized for each user, using the tone and colloquialisms specific to them. Do you often say “y’all” or deploy the laugh-cry emoji? Maybe you’ll start seeing them more often in the ads around your Google experience.

Or maybe brands will start talking to you directly in your own tone and manner, or in that of your friends. The rise of social media, and the sudden ability of institutions to connect directly with millions of people, has brought a corresponding rise in advertisers’ obsession with brand “authenticity.” In practice, this means major consumer brands take to Twitter and Facebook to express themselves in stilted millennial-speak: “Our products are bae, and also woke.” What happens when they can run a program that generates individually targeted “authentic” messages? Denny’s could DM you and sound eerily like your best friend. Or like you.

At this point, it’s just speculation: Google’s predictive tech isn’t yet good enough to be useful to most people, let alone advertisers, and it’s not clear that the company has any plans at all to sell data like this to partners. But the next time Google accurately guesses what you meant to type, you might wonder what else is going on in its enormous, distributed brain.