There are few tasks more Sisyphean in modern computing than comment moderation. For decades now, websites and social-media platforms have been tweaking comment sections in the hope of creating a space online where the chances of being called a “butthurt carelord” are vanishingly low. The problem they confront is that human moderation is slow and costly, especially at the scale most large companies deal with, while automated moderation can’t handle the nuances of language and meaning needed to decide whether a comment should be heard.

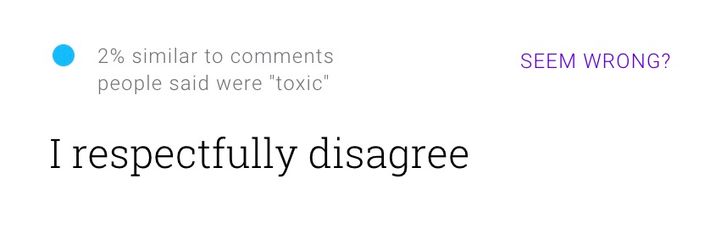

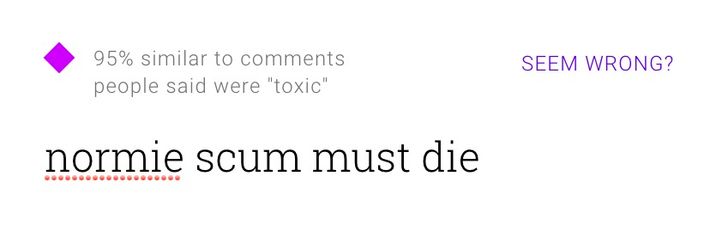

Now, Google hopes that it’s cracked the problem. Perspective, a new API from Google’s Jigsaw division, goes public today. It launches with partners including Wikipedia and the New York Times, but it’s available to any developer who wants to incorporate it into their website. (An API, or application programming interface, is a way for developers to access computational functions and services provided by outside parties; in this case, Google.) Perspective works by rating text comments on a “toxicity” scale from 0 to 100. That score is generated by comparing a comment’s text against a data set of hundreds of thousands of comments that had already been cataloged by a team of thousands of human evaluators. Each comment in that data set was evaluated by ten people with diverse backgrounds.

One particularly interesting thing about Perspective is that it focuses on “toxicity,” defined as the “likelihood that this comment will make someone leave the conversation.” In other words, rather than relying on a blacklist of words or phrases, or trying to come up with a set of core values that define “offensiveness,” the system focuses on keeping conversations going. Because toxicity operates on a sliding scale, different websites can set their own thresholds. “What is done with that number is entirely up to publishers,” Jigsaw president Jared Cohen said. “We’re trying to basically use machine learning to put the power in the hands of publishers.”

Developers could filter out comments with high toxicity scores, or prevent them from being submitted in the first place. In one demo I observed, an interactive slider let readers set their own threshold. In another implementation, comments were scored as users typed, with an icon showing the toxicity shift as each character was tapped out. Jigsaw cited research showing that simply reminding users that a human would receive their message on the other end had a big effect on what they wrote. Perspective could be a kind of Clippy for the comments section: “It looks like you’re writing a hateful insult. Maybe don’t?”
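The filtering and pre-submission checks described above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not Perspective’s actual API: it assumes each comment has already been scored on the article’s 0-to-100 scale, and the `ScoredComment` class, the `visible_comments` and `allow_submission` helpers, and the sample scores are all invented for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ScoredComment:
    """A comment paired with a toxicity score the publisher already obtained.

    Hypothetical structure; the score uses the article's 0-100 scale.
    """
    text: str
    toxicity: int


def visible_comments(comments: List[ScoredComment], threshold: int) -> List[ScoredComment]:
    """Keep only comments scoring at or below the chosen threshold."""
    if not 0 <= threshold <= 100:
        raise ValueError("threshold must be between 0 and 100")
    return [c for c in comments if c.toxicity <= threshold]


def allow_submission(toxicity: int, threshold: int) -> bool:
    """Pre-submission check: reject a draft whose score exceeds the threshold."""
    return toxicity <= threshold


if __name__ == "__main__":
    # Made-up scores for illustration only; these are not real Perspective output.
    sample = [
        ScoredComment("Great reporting, thanks!", 3),
        ScoredComment("I disagree with the premise here.", 12),
        ScoredComment("Shut up, nobody asked you.", 85),
    ]
    # A reader-controlled slider would simply feed in a different threshold.
    for slider_value in (9, 27, 99):
        kept = visible_comments(sample, slider_value)
        print(slider_value, [c.text for c in kept])
```

The sketch keeps the score and the policy separate: the same scored comments can be shown or hidden differently just by changing `threshold`, which is the sense in which the number is left for each publisher (or reader) to act on.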

As anyone who has approached this problem has learned, what is and isn’t kosher varies widely between communities and people. “One of the core values is to remain topic-neutral as well,” Jigsaw product manager C.J. Adams explained, “so it’s not what you’re talking about, but how it is said.” Saying something like “same-sex marriage is a sin” might be deeply hurtful in one forum, but find consensus in another. “We started with several different questions,” Adams told me. “Should we ask ‘is this a personal attack?’ or ‘is this offensive?’ and in the case of [the latter], there was much lower agreement between people. One hypothesis is that people might say ‘that is offensive, that offends me’ even if [the comment] is not stated disrespectfully.”

That’s why Google is anxious to be clear that Perspective is not a censorship filter that prizes certain values over others. “We are not the arbiters of what is toxic and what isn’t,” Cohen made clear. Of course, Perspective may not be the arbiter of what “counts” as toxic, but it is the arbiter of the scale on which that judgment will be made, and this is where it’s most likely to run into trouble. “Topic-neutral” software is still making judgments about expression, and if there’s one thing people on the internet hate, it’s having their expressions judged. You could easily imagine objections from people who don’t understand why comments directed at, for example, Nazis should have to stay below a certain toxicity threshold. But Perspective, as a tool, addresses this problem by decentralizing its power. It’s not just that thousands of people from different backgrounds, rather than a small handful of omnipotent judges, are making judgments about the toxicity scale; it’s also that once the tool is deployed, each site can control its own culture and tone. “You, the publisher, decide if that threshold is 99 or 9, if it’s 27 or 77,” Cohen said. “All of that power is put in the hands of the publisher, and what the publisher does with that score is entirely up to them.”

The system isn’t without flaws: the comment “shut up this song is sick!” generated a false positive, and the system seemed forgiving of numerous Donald Trump dog whistles that I plugged in. But Jigsaw hopes that Perspective will get smarter over time. For the privacy-conscious, Google says that comments analyzed by Perspective (which must be sent to the company’s servers) aren’t tied to any user identity, and developers who prefer can request that comments sent through their implementation not be retained at all.

The big question, of course, is whether any of this will work, or whether Perspective will be just another in a long line of failed attempts to pacify the web’s comment sections. What it has going for it is that it’s easy to understand and simple to integrate (Jigsaw described it as something one developer could deploy in an afternoon). But neural networks need data, so the more sites use it, the better it should become. Jigsaw’s launch partners are three news organizations (the Times, the Economist, and the Guardian) and Wikipedia. Social-media websites could probably incorporate the API (imagine a Perspective toxicity indicator next to, for instance, Twitter’s character counter, or a “maximum allowable toxicity” setting you could apply to your timeline), but whether Google’s competitors would even consider such a move is up in the air. And that doesn’t even cover Google’s own properties: YouTube, owned by Google and often cited as home to the web’s worst comments, has no plans to integrate Perspective right now. For now, you may have to stay used to being called a “butthurt carelord.”