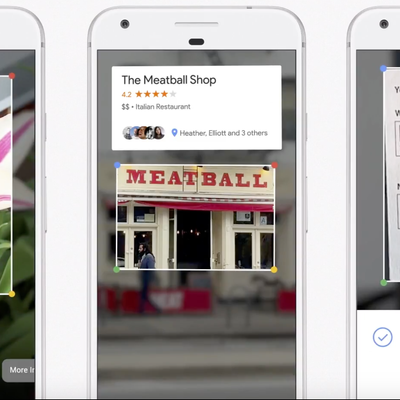

If you’ve ever stared at a flower and wondered exactly what it is, or stood outside a restaurant and wondered whether it’s actually any good, Google’s new Lens tool is aimed at you. Announced today by Google CEO Sundar Pichai at Google’s I/O conference, and available soon for Google Assistant and Google Photos users, Google Lens promises to understand, and tell you, exactly what is in a given photo. Using machine learning, Pichai explained, Lens will be able to analyze your images and offer real-time information and suggestions.

As a demo, he showed the tool labeling a photo of a flower (good for allergy sufferers, Pichai joked). The feature suggested which types of flower it might be and also recommended a nearby florist. In another demonstration, a photo of a restaurant marquee taken from a New York street prompted Google Lens to offer reviews and ratings of that restaurant. A third example, greeted with the largest round of applause, involved taking a photo of the string of preset letters and numbers that comes with a Wi-Fi router. Instead of crawling on the floor and shouting “S23HGKADF9723H” to the friend trying to connect to your home Wi-Fi, the password to which you never bothered to customize, you take a photo of the label on the side of the router and Google Lens automatically connects your device to the network.

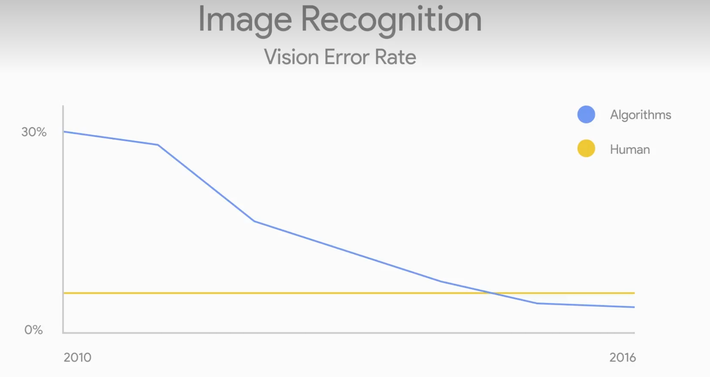

As for how well these machines actually learn and function, Pichai noted that Google’s image-recognition technology has improved markedly over the past six years, to the point that it can now identify what is in a given image more accurately than a human being can.