A new report from the United Nations is taking the tech industry to task for its lack of diversity, in part criticizing smart speakers and AI programs for being gendered as female and perpetuating gender stereotypes. The report identifies several reasons for this, including that women are not well represented on the teams working on helper AI and that gender stereotypes lead those teams to assume that female voices are more appealing to users.

In a statement, Saniye Gülser Corat, director of gender equality at UNESCO, said that the technology’s “hardwired subservience influences how people speak to female voices and models how women respond to requests and express themselves. To change course, we need to pay much closer attention to how, when and whether AI technologies are gendered and, crucially, who is gendering them.”

Tech companies are generally quick to point out that while their assistants are feminized, they are genderless automated programs. Still, Alexa, Siri, and Cortana are all female names or the names of female characters. The report highlights the difference between the preference for a male voice (generally seen as authoritative) and a female voice (generally seen as helpful). The report says that “people’s preference for female voices, if this preference even exists, seems to have less to do with sound, tone, syntax and cadence, than an association with assistance.”

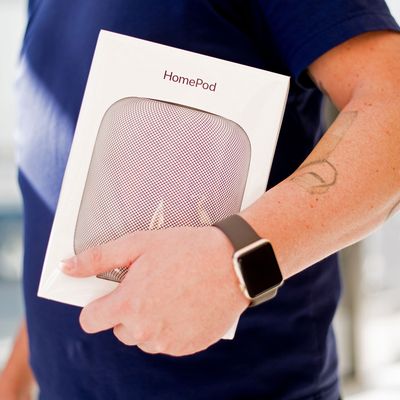

The result is that “constantly representing digital assistants as female gradually ‘hard-codes’ a connection between a woman’s voice and subservience.” All four of the leading voice assistants from Amazon, Google, Apple, and Microsoft launched with only female voices, and in almost all cases, the female voice is the default.

This seems like a difficult problem to solve, because imbuing these programs with humanlike speaking habits makes them feel more familiar and worth using. But it isn’t, really. The problem is less that female voice assistants exist at all and more that for too long, they were the only option. To challenge the assumption that assistants should be female, tech companies can make simple changes to how their smart speakers and helpers work. For one thing, they could force users to select a preferred voice when they set up their assistant, instead of making the choice for them.

But we can probably stand to get weirder with it too. A video game called Rust comes to mind, in which the player’s in-game character is randomly assigned a race and gender when they begin (these factors have no influence over the player’s ability within the game). As in Rust, it might be cool if users were randomly assigned, from the jump, one of the various voice options an assistant offers. Or maybe the voices could be shuffled around, so that it’s not the same female assistant every time but a rotating array of helpful disembodied voices from across the spectrum.

Really, the best solution would be for all of these tech companies to invent a time machine, go back, and avoid launching with only female voices and perpetuating the stereotype for years. But that one seems a little far-fetched.