The forecasters are fighting. Sometimes it’s a subtweet: “The blindingly obvious lesson of 2016 for election modelers is ‘it’s super easy to build an overconfident model, so think carefully about sources of uncertainty’ but sometimes people are endlessly creative in finding ways to avoid the obvious lessons.”

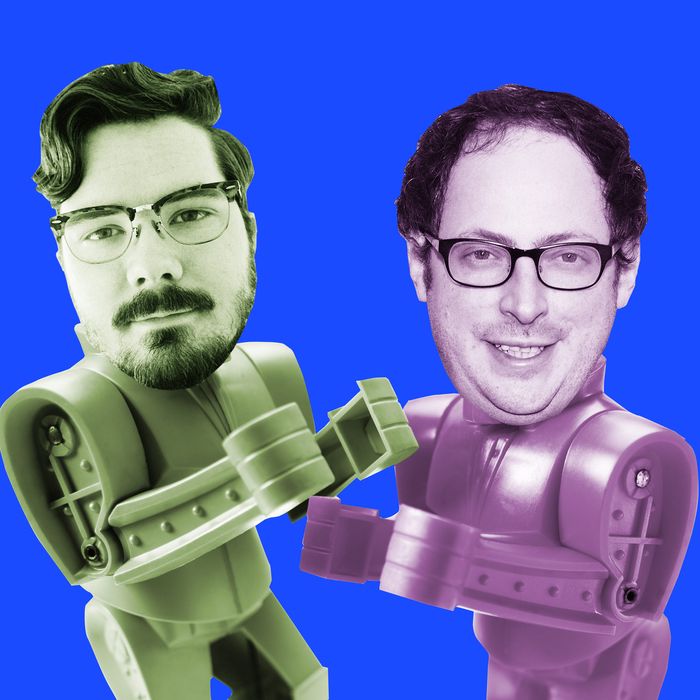

But for much of the summer and early fall, the sniping was quite direct, to the point that Nate Silver, the forecasting supremo and FiveThirtyEight editor-in-chief, used the work of The Economist’s 24-year-old data journalist G. Elliott Morris and his colleagues as an example of the failures of the modern “data-science curriculum.” Silver had taken issue with Morris’s promotion of the “record” of The Economist’s 2020 election model, that is, how well it would have forecast previous U.S. presidential elections. Silver, who would later release a model that was far less certain of an eventual Joe Biden victory, was not impressed that The Economist’s model, which this team published for the first time in 2020, had a good record of predicting elections that had already happened.

When FiveThirtyEight first published its forecast for the 2020 race, it put Biden’s chances to win at 71 percent, while The Economist set them at 87 percent. Today the two have gotten significantly closer: FiveThirtyEight gives Biden an 87 percent chance of winning, and The Economist gives him 91 percent. But while this may make it seem as though the difference isn’t that great (both round to Biden having a nine-out-of-ten shot), the distance they traveled to get there illuminates the defining question of a race in which one candidate has had a sizable, steady lead virtually the whole time. How likely, especially considering [waves hands] all that’s going on right now, are such leads to hold, and how should we think about the impact of things that are fundamentally difficult to anticipate and may not show up in the data we look back on in order to project forward?

“To put it another way, the easy part of forecasting is fitting a model and the hard part is knowing when the best fit on past data will yield a worse forecast on unknown data,” Silver tweeted.

Silver’s argument has been that Morris and The Economist have been far too certain far too early in the race, overconfident in their ability to discern from past elections what was likely to happen in this one. And Silver has earned some right to talk about what’s come before: He is virtually the only forecaster left standing after the Pollingdämmerung that was 2016, an election that occurred when Morris was still a junior at the University of Texas.

After an election that nearly every forecaster called incorrectly because of substantial polling errors in several key states (though Silver was less wrong than virtually anyone else), the question of how to handle uncertainty is now, for some, the defining question of forecasting: the art of running polling and other data through a set of rules, equations, and simulations to predict, in the months and days leading up to the election, how people will vote in November.

But what if the defining feature of the election isn’t how much we don’t know (thanks to the coronavirus, thanks to voting shenanigans, thanks to an unrelenting onslaught of weird news) but how much we do know? The economy isn’t doing great, President Trump is unpopular, and Biden has been leading in the polls since they began.

“There’s something about referendum elections statistically,” Morris told me, “that are easier to predict.”

There aren’t as many forecasters these days, or at least not as many to pay attention to. In 2016, the New York Times tracked five forecasters that used some kind of method of collecting polls to produce a forecast (a percentage chance of a win) for the race: the New York Times itself, Nate Silver’s FiveThirtyEight, Daily Kos, The Huffington Post, and neuroscientist Sam Wang’s Princeton Electoral Consortium. The odds ranged from Silver’s 29 percent chance for Trump to 15 percent from the Times, 8 percent from Daily Kos, 2 percent from The Huffington Post, and virtually zero percent from Wang. In the current cycle, Daily Kos and HuffPost are out of the presidential-election game entirely, and the New York Times has turned itself into a formidable polling operation, while Wang ate a bug on CNN.

But these high-profile projects were hardly the only formalized election predictions. Election forecasting, especially for the public, outside of campaigns, has long attracted hobbyists and amateurs, as Silver once was. In 2016, one of those was Morris, who produced a forecast for his blog, The Crosstab.

While Morris may not have had a large audience back then, he still felt compelled, like many of his more prominent colleagues, to explain why he had given Hillary Clinton merely an 85 percent chance to win. Trump’s victory, Morris wrote, was “surprising,” but “it was always possible — and when her blue firewall looked weak, that possibility increased dramatically.”

Morris was writing authoritatively and fluently, already taking on the we pronoun, analogizing unlikely outcomes to field-goal attempts, and sticking up for the ability of “‘fundamental’ indicators and Bayesian (fancy) analysis” to forecast election results despite Trump’s win.

“I just did this stuff a lot, day in and day out when I was in school,” Morris said. “In part, because I was really academically interested in how successful or how unsuccessful polls could be, and I shared the work on social media and Twitter quite frequently.”

He could be found in the mentions of prominent journalists and election analysts explaining that just because Clinton’s vote-share projections had been off didn’t mean all of polling had to be thrown out the window.

A few months later, he would be recognized by Mother Jones and then by FiveThirtyEight itself for his work forecasting the French presidential election, an exercise Morris described as a test of “do polls still work after … 2016, and it turns out they do work.” Then The Economist’s data editor, Dan Rosenheck, got in touch with him, and Morris graduated from university. “To be blogging about these things for fun,” Morris said, “and get some pretty prominent people in the world that I was paying attention to back then following from the outside talking about it was neat.”

At the time, Morris said, he was “religiously” following Silver and Harry Enten, then both of FiveThirtyEight, and he was soon having wonky exchanges with Enten about how he calculated standard error in his polls. That kind of exchange hasn’t gone away: A few days after I spoke to Dave Wasserman of NBC and The Cook Political Report, he reached out to me again to say, “We’re having too much fun getting into nerd fights over fairly minor differences in interpretation of election data.”

But now when Morris says “we,” he’s referring to the team of Economist journalists and Columbia University researchers who work on the British magazine’s election forecast. And when he tweets, tens of thousands can tune in to watch him go at it for round after round with Silver himself, at least until the conversation ended with a block.

Morris is now probably the only public-facing media forecaster who can stake a claim to territory that everyone except Silver’s FiveThirtyEight colossus has abandoned. Forecasting has followed the rest of the media to Twitter, where everyone spends all their time and says they hate it.

“I hate Twitter,” Andrew Gelman, the Columbia professor and renowned statistician who works on The Economist’s model, told me. “I’m sure that Elliott has a good reason for using it.”

“I think we can learn absolutely nothing from Twitter fights,” said Natalie Jackson, the director of research at the Public Religion Research Institute who co-helmed The Huffington Post’s forecasting model in 2016. “When you reach the point where you’re just arguing, and one person is not giving the answer to the question but instead is turning around and pointing to ‘Well, your model did this, where my model is great,’ it’s just really pointless and really frustrating to see.”

Silver declined to comment for this piece, citing his lack of interest “in talking about the ‘model wars’ with so much else going on in the country right now,” and referred me to his public comments. He also said that “the Economist team routinely misunderstand and misrepresent important aspects of how the FiveThirtyEight model works, which is frustrating. But it’s exceptionally exasperating to deal with them, so I’m just not going to do it anymore.”

“It’s not like I have contempt for the guy. Game respect to game,” Morris told me.

At this point, I must admit to some trepidation. While some suspect Silver of inflating Trump’s chances of winning this time around, what I’m most worried about is writing my name into the book of journalistic infamy by expressing any skepticism about Silver’s skepticism — or lack thereof — in October or even November of a presidential-election year.

On October 29, 2012, Dylan Byers, then a Politico media reporter, asked, “Nate Silver: One-term celebrity?” before Silver correctly predicted the winner of all 50 states, gave Barack Obama a greater than 90 percent chance of winning, and brought FiveThirtyEight to ESPN and Disney.

Four years later, The Huffington Post’s Ryan Grim accused Silver of “unskewing polls … for Trump,” calling his relatively weak forecast for a Clinton win an “outlier … that is causing waves of panic among Democrats around the country and injecting Trump backers with hope that their guy might pull this thing off after all.” That story was published on November 5, and Wired was ready to dethrone Silver entirely on November 7, with Jeff Nesbit proclaiming that we should “forget Nate Silver” while declaring that Wang was the “new king of the presidential election data mountain.” Remember the bug?

Morris and his colleagues at The Economist have been bullish on Biden since unveiling their model this summer, putting him at an 85 percent chance or better to win since July. Their reasoning: Why should we expect things to move around a lot when there are fewer swing voters and thus less volatility in the polls?

“Just go to our website. It’s been 53 percent and 54 percent [for Biden] for the entire election,” Morris said. “I think that has to tell you something about the fundamental predictability of voter behavior in the year of our Lord 2020.”

Looking at the top line of Silver’s forecast at FiveThirtyEight, you would see something similar: a narrow popular-vote range for Biden above 50 percent, along with a climbing, but lower, probability of victory that started at the relatively measly 71 percent chance of winning (remember that number?) in August.

One data analyst I spoke to, Jonathan Robinson of Catalist, the Democratic data firm, thought that much of the difference between the two forecasters might not be due to deep philosophical divides about how to predict an electoral-vote total in August of an election year. Instead, they just seem to have different reads on how well Biden is doing relative to Trump.

Robinson looked at Silver’s figures for how big a popular-vote margin Biden would need to have an even chance of winning the election, given Trump’s advantage in the Electoral College. A two-to-three-point win in the popular vote gave Biden a 46 percent chance of winning the election, a three-to-four-point margin gave him 74 percent, and a four-to-five-point win put him at 89 percent to get the necessary electoral votes. Jumps that big mean that Biden doing half a percentage point better than expected (and Trump half a percentage point worse) could make a big difference in the likelihood of a Biden win. If The Economist had Biden roughly one point better off than Silver had him, whether because of how the polls were averaged, different economic projections, or any number of other choices made in their models, that alone could account for the divergence in odds.
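A toy lookup built only from the three figures Robinson cited shows how steep that curve is. The margin buckets and probabilities come straight from the paragraph above; the function name and the choice to treat each bucket as a flat step are illustrative assumptions, since FiveThirtyEight’s model produces a continuous distribution rather than a table.

```python
# Biden's chance of winning the Electoral College for a given national
# popular-vote margin, using only the three buckets Robinson cited from
# FiveThirtyEight. Treating each bucket as a flat step is a simplification.
WIN_PROB_BY_MARGIN = [
    # (lower bound of margin in points, upper bound, chance of winning)
    (2.0, 3.0, 0.46),
    (3.0, 4.0, 0.74),
    (4.0, 5.0, 0.89),
]


def chance_from_margin(margin: float) -> float:
    """Return the cited win probability for a popular-vote margin (in points)."""
    for low, high, prob in WIN_PROB_BY_MARGIN:
        if low <= margin < high:
            return prob
    raise ValueError(f"no cited figure for a {margin}-point margin")


# A one-point swing in the expected margin (half a point toward Biden,
# half a point away from Trump) moves the odds by double digits.
for margin in (2.5, 3.5, 4.5):
    print(f"{margin}-point margin -> {chance_from_margin(margin):.0%}")

gain = chance_from_margin(4.5) - chance_from_margin(3.5)
print(f"3.5 -> 4.5 points: +{gain:.0%}")
```

Moving from a 3.5-point to a 4.5-point margin lifts the cited chance from 74 to 89 percent, which is roughly the scale of the gap between the two forecasts.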

But the weeks of sniping, and sometimes substantive debate, before and after Silver’s model came out largely centered on the question of uncertainty and whether there was something special about 2020. Morris described Silver’s projections in August of this year and in 2016 as “fundamentally irreconcilable,” saying it was “a bit humorous that you were surprised the model was bearish on Biden when you just added like 30 percent more uncertainty because your gut told you so. What else would have happened?” Silver was just as eager a participant, saying Morris and The Economist were “completely irresponsible … not to think about the effect that COVID-19 will have … The job of a forecast is to reflect the real world, and COVID is [a] huge part of the real world today.”

The Economist’s model accounted for COVID by smoothing out how an economy that gyrates from steep recession to rapid recovery can affect the race. Silver, however, spun up an “uncertainty index,” which included a number of factors, some of which tamp down on uncertainty (polarization, the stability of polling averages) and some of which amp it up (economic volatility and, most controversially, “the volume of major news, as measured by the number of full-width New York Times headlines in the past 500 days”).

The uncertainty index was part of the “national drift,” or “how much the overall national forecast could change between now and Election Day,” which represented, according to Silver, “the biggest reason Biden might not win despite currently enjoying a fairly large lead in the polls.” But as we get closer to Election Day, the national drift in Silver’s model decays, with the polls experiencing a “sharp increase in accuracy toward the end of an election.”
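The logic of a decaying drift term can be sketched with a deliberately simple model: treat the final national margin as normally distributed around today’s lead, with a fixed polling-error component plus a drift component that shrinks as Election Day approaches. The functional form (drift growing with the square root of days remaining), the function names, and every number below are hypothetical, not FiveThirtyEight’s actual parameters, and the sketch ignores the Electoral College entirely.

```python
import math


def normal_cdf(x: float) -> float:
    """Standard normal cumulative distribution function, via math.erf."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def chance_lead_holds(lead: float, days_left: int,
                      polling_error: float = 3.0,
                      drift_per_sqrt_day: float = 0.3) -> float:
    """Probability a national lead (in points) is still a lead on Election Day.

    Hypothetical toy model: total uncertainty combines a fixed polling error
    with a drift term that grows with the square root of the days remaining,
    so the drift decays to zero as Election Day arrives.
    """
    drift = drift_per_sqrt_day * math.sqrt(days_left)
    sigma = math.sqrt(polling_error ** 2 + drift ** 2)
    return normal_cdf(lead / sigma)


# The same steady seven-point lead looks safer as the calendar runs out.
for days in (90, 30, 7, 0):
    print(f"{days:>2} days out: {chance_lead_holds(7.0, days):.1%}")
```

In a model shaped like this, only the drift term depends on the calendar, so a lead that merely holds steady translates into rising odds as Election Day nears.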

Jackson described the use of things like large Times headlines as “a little data-miney” and evidence that “they were looking for something that inserts uncertainty.” Progressive pollster Sean McElwee said that Silver’s search for uncertainty was “clearly an overreaction to 2016” but that he had “earned the right to experiment” with new factors like the New York Times headlines.

Wasserman looked at it the other way: “You see modelers trying to backfit their data over a past series of elections and use it to predict the next one. But an approach that works in one election does not work in the next, and that’s why modeling is so precarious. The insinuation is that he’s pumping something artificial into a model to hedge his bets. I’d flip the question on its head. Why are other modelers pumping certainty into their forecast?”

That Silver is being cast as the oracle of uncertainty and the skeptic of data qua data may surprise those who see him purely as the oracle of 2012, but it is in keeping with his long-held, more considered views of forecasting. “Models … require judgement and expertise, and I’ve long railed against the idea that one should assume it’s obvious ‘what the data says,’” Silver tweeted in August, in defense of his controversial inclusion of girthy Times headlines.

I did most of the reporting for this story in the last week of September — that is, before the president, a few Republican senators, and several of Trump’s closest advisers and White House staffers became infected with COVID-19. The president’s disease and his trip to Walter Reed medical center for treatment prompted, yes, a full-width New York Times headline and seemed to be the exact type of thing that could throw the race for a loop.

The Biden campaign halted its negative ads, and Trump went into near silence (save for some short videos) for a weekend, his health uncertain. But now the president is back, tweeting as much as usual and flip-flopping on seemingly an hourly basis about whether he should negotiate a stimulus package with congressional Democrats. And that was on top of a debate widely considered disastrous for him.

But has so much really changed? You don’t see much movement in the poll aggregates, even as Biden’s odds of winning have ticked up.

“There are big events that happen all the time and get forgotten,” Robinson said. “Ruth Bader Ginsburg passed away on Rosh Hashanah. The president’s tax returns were released. Maybe this is so unprecedented that it adds more variance to the outcomes. We’ll have to wait and see.”

A few hours after Robinson and I spoke, Trump was on his way, via helicopter, to Walter Reed. Who could have predicted that?

Correction: Silver placed Trump’s chances at 29 percent in 2016.