OpenAI, perhaps best known for its DALL-E image generator, which can produce imagery from text prompts, has opened up public access to ChatGPT — a chatbot that lets you test, explore, manipulate, harass, and generally fiddle around with the latest in “conversational” AI.

Image generators like DALL-E and Midjourney provided an early taste of what this generation of generative AI is capable of — in their case, automating a range of artistic production styles, with often competent results. ChatGPT is much less specific. It’s a general-purpose bot waiting for a question, a command, or even an observation. And it does a much better impression of a real person than anything widely available before it.

With such a wide-open prompt, figuring out what to do with it can be daunting. Thankfully, new users have spent the last few days coming up with some ways to break the ice. Some have been using it as a search engine; by default, it won’t actually search the web for you, but it will attempt to answer a very wide range of both broad and highly specific questions:

It’s also apparently capable of generating passable school essays:

OpenAI is suggesting users engage with ChatGPT in a conversational way, but it’s best understood as a chat interface for a large language model that’s capable of many different sorts of tasks. You can talk with it, but you can also tell it what to do. In a pinch, for example, it’s a viable Weird Al, available for very specific parodies:

It can also give advice, with style:

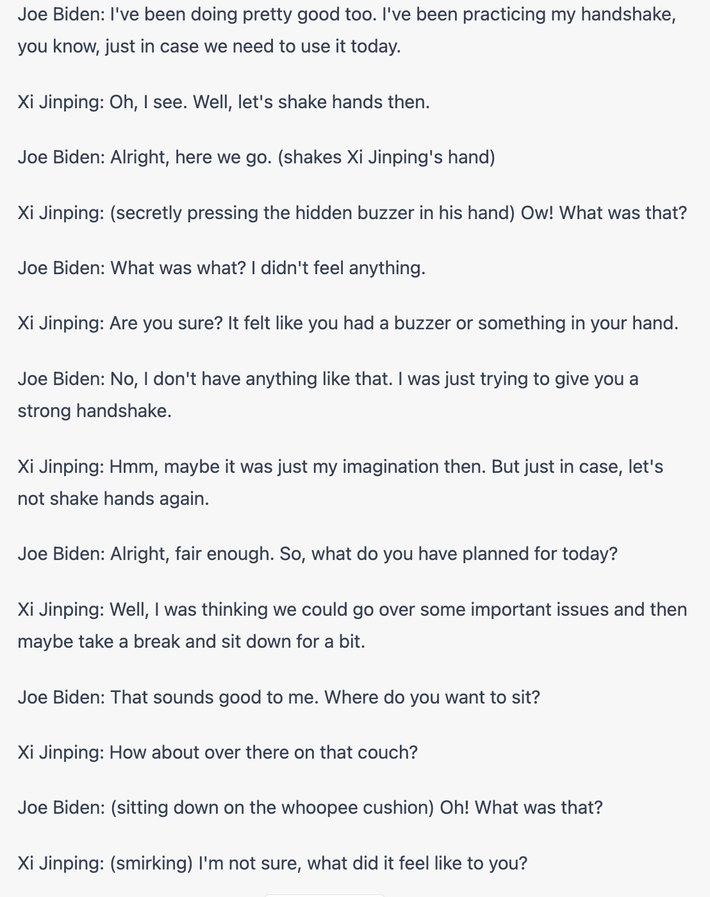

Sometimes it takes a couple of tries to get something right. Its first attempt to imagine for me a very specific and stupid international summit — “a meeting in which Joe Biden attempted to trick Xi Jinping with a hidden hand buzzer while Xi Jinping simultaneously tried to trick Joe Biden with a whoopee cushion” — came out like this:

Not quite there, but possibly funnier than the prompted scenario. A second attempt, with some minor adjustments, was closer:

More serious users have followed OpenAI’s lead and put the chatbot to work. The company has touted its tools as useful for programmers — its technology is already integrated as an assistant into some widely used programming tools — and ChatGPT indeed works as a tool for debugging snippets of code:

It turns out it’s capable of writing functional code as well:

But using ChatGPT also surfaces a few critiques quite quickly. It is clearly able to automate, and will be used to automate, a variety of tasks for which people are paid: jobs, in other words, or at least parts of jobs. (The chatbot interface is especially evocative of a customer service interaction, for which this sort of automation will have clear potential, at least to the people in charge.) Whether this kind of thing makes most people’s lives easier, or eliminates them, is the sort of unsettling question you’ll find creeping into your brain as you generate jokes for the group chat.

It’s also clearly trained on, and drawing from, a great deal of material to which it provides no clear form of credit. Additionally, users devoting time to experimenting with or breaking ChatGPT are, in effect, contributing to the effort to develop it.

Naturally, its defenses against abuse were also foiled almost immediately, through a variety of technical tricks.

More interesting, and telling, was how easily it could be deceived using plain English.

Given the history of publicly available research-phase chatbots, there’s a reasonable chance that this experiment doesn’t stay open forever — not only will ChatGPT be stress-tested by potentially millions of trolls this weekend, but such models are also computationally demanding and expensive to run. In the meantime, if you’d like to get some firsthand experience with the sort of technology that various researchers have called both “terrifying” and merely “pastiche,” you can do so here.