Something has gone wrong with the internet. Even Mark Zuckerberg knows it. Testifying before Congress, the Facebook CEO ticked off a list of everything his platform has screwed up, from fake news and foreign meddling in the 2016 election to hate speech and data privacy. “We didn’t take a broad enough view of our responsibility,” he confessed. Then he added the words that everyone was waiting for: “I’m sorry.”

There have always been outsiders who criticized the tech industry — even if their concerns have been drowned out by the oohs and aahs of consumers, investors, and journalists. But today, the most dire warnings are coming from the heart of Silicon Valley itself. The man who oversaw the creation of the original iPhone believes the device he helped build is too addictive. The inventor of the World Wide Web fears his creation is being “weaponized.” Even Sean Parker, Facebook’s first president, has blasted social media as a dangerous form of psychological manipulation. “God only knows what it’s doing to our children’s brains,” he lamented recently.

To understand what went wrong — how the Silicon Valley dream of building a networked utopia turned into a globalized strip-mall casino overrun by pop-up ads and cyberbullies and Vladimir Putin — we spoke to more than a dozen architects of our digital present. If the tech industry likes to assume the trappings of a religion, complete with a quasi-messianic story of progress, the Church of Tech is now giving rise to a new sect of apostates, feverishly confessing their own sins. And the internet’s original sin, as these programmers and investors and CEOs make clear, was its business model.

To keep the internet free — while becoming richer, faster, than anyone in history — the technological elite needed something to attract billions of users to the ads they were selling. And that something, it turns out, was outrage. As Jaron Lanier, a pioneer in virtual reality, points out, anger is the emotion most effective at driving “engagement” — which also makes it, in a market for attention, the most profitable one. By creating a self-perpetuating loop of shock and recrimination, social media further polarized what had already seemed, during the Obama years, an impossibly and irredeemably polarized country.

The advertising model of the internet was different from anything that came before. Whatever you might say about broadcast advertising, it drew you into a kind of community, even if it was a community of consumers. The culture of the social-media era, by contrast, doesn’t draw you anywhere. It meets you exactly where you are, with your preferences and prejudices — at least as best as an algorithm can intuit them. “Microtargeting” is nothing more than a fancy term for social atomization — a business logic that promises community while promoting its opposite.

Why, over the past year, has Silicon Valley begun to regret the foundational elements of its own success? The obvious answer is November 8, 2016. For all that he represented a contravention of its lofty ideals, Donald Trump was elected, in no small part, by the internet itself. Twitter served as his unprecedented direct-mail-style megaphone, Google helped pro-Trump forces target users most susceptible to crass Islamophobia, the digital clubhouses of Reddit and 4chan served as breeding grounds for the alt-right, and Facebook became the weapon of choice for Russian trolls and data-scrapers like Cambridge Analytica. Instead of producing a techno-utopia, the internet suddenly seemed as much a threat to its creator class as it had previously been their herald.

What we’re left with are increasingly divided populations of resentful users, now joined in their collective outrage by Silicon Valley visionaries no longer in control of the platforms they built. The unregulated, quasi-autonomous, imperial scale of the big tech companies multiplies any rational fears about them — and also makes it harder to figure out an effective remedy. Could a subscription model reorient the internet’s incentives, valuing user experience over ad-driven outrage? Could smart regulations provide greater data security? Or should we break up these new monopolies entirely in the hope that fostering more competition would give consumers more options?

Silicon Valley, it turns out, won’t save the world. But those who built the internet have provided us with a clear and disturbing account of why everything went so wrong — how the technology they created has been used to undermine the very aspects of a free society that made that technology possible in the first place. —Max Read and David Wallace-Wells

The Architects

(In order of appearance.)

Jaron Lanier, virtual-reality pioneer. Founded first company to sell VR goggles; worked at Atari and Microsoft.

Antonio García Martínez, ad-tech entrepreneur. Helped create Facebook’s ad machine.

Ellen Pao, former CEO of Reddit. Filed major gender-discrimination lawsuit against VC firm Kleiner Perkins.

Can Duruk, programmer and tech writer. Served as project lead at Uber.

Kate Losse, Facebook employee No. 51. Served as Mark Zuckerberg’s speechwriter.

Tristan Harris, product designer. Wrote internal Google presentation about addictive and unethical design.

Rich “Lowtax” Kyanka, entrepreneur who founded influential message board Something Awful.

Ethan Zuckerman, MIT media scholar. Invented the pop-up ad.

Dan McComas, former product chief at Reddit. Founded community-based platform Imzy.

Sandy Parakilas, product manager at Uber. Ran privacy compliance for Facebook apps.

Guillaume Chaslot, AI researcher. Helped develop YouTube’s algorithmic recommendation system.

Roger McNamee, VC investor. Introduced Mark Zuckerberg to Sheryl Sandberg.

Richard Stallman, MIT programmer. Created legendary software GNU and Emacs.

How It Went Wrong, in 15 Steps

Step 1

Start With Hippie Good Intentions …

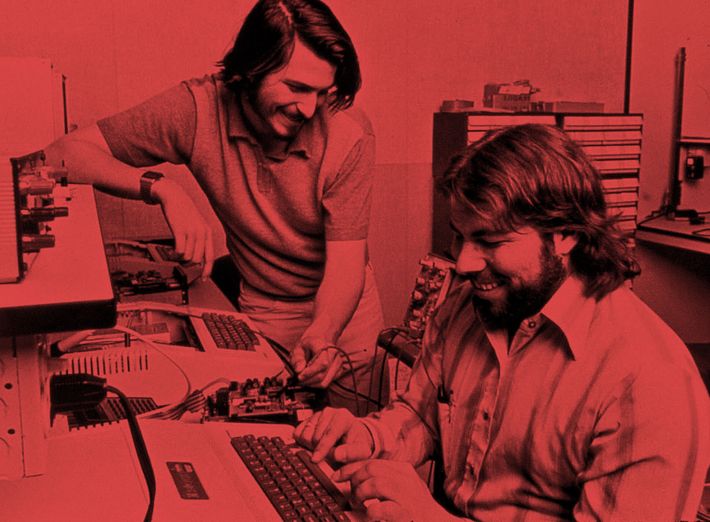

The Silicon Valley dream was born of the counterculture. A generation of computer programmers and designers flocked to the Bay Area’s tech scene in the 1970s and ’80s, embracing new technology as a tool to transform the world for good.

Jaron Lanier: If you go back to the origins of Silicon Valley culture, there were these big, traditional companies like IBM that seemed to be impenetrable fortresses. We had to create our own world. To us, we were the underdogs, and we had to struggle.

Antonio García Martínez: I’m old enough to remember the early days of the internet. A lot of the “Save the world” stuff comes from the origins in the Valley. The hippie flower children dropped out, and Silicon Valley was this alternative to mainstream industrial life. Steve Jobs would never have gotten a job at IBM. The original internet was built by super-geeky engineers who didn’t fully understand the commercial implications of it. They could muck around, and it didn’t matter.

Ellen Pao: I think two things are at the root of the present crisis. One was the idealistic view of the internet — the idea that this is the great place to share information and connect with like-minded people. The second part was the people who started these companies were very homogeneous. You had one set of experiences, one set of views, that drove all of the platforms on the internet. So the combination of this belief that the internet was a bright, positive place and the very similar people who all shared that view ended up creating platforms that were designed and oriented around free speech.

Can Duruk: Going to work for Facebook or Google was always this extreme moral positive. You were going to Facebook not to work but to make the world more open and connected. You go to Google to index the world’s knowledge and make it available. Or you go to Uber to make transportation affordable for everyone. A lot of people really bought into those ideals.

Kate Losse: My experience at Facebook was that there was this very moralistic sense of the mission: of connecting people, connecting the world. It’s hard to argue with that. What’s wrong with connecting people? Nothing, right?

Step 2

… Then mix in capitalism on steroids.

To transform the world, you first need to take it over. The planetary scale and power envisioned by Silicon Valley’s early hippies turned out to be as well suited for making money as they were for saving the world.

Lanier: We wanted everything to be free, because we were hippie socialists. But we also loved entrepreneurs, because we loved Steve Jobs. So you want to be both a socialist and a libertarian at the same time, which is absurd.

Tristan Harris: It’s less and less about the things that got many of the technologists I know into this industry in the first place, which was to create “bicycles for our mind,” as Steve Jobs used to say. It became this kind of puppet-master effect, where all of these products are puppet-mastering all these different users. That was really bad.

Lanier: We disrupted absolutely everything: politics, finance, education, media, family relationships, romantic relationships. We won — we just totally won. But having won, we have no sense of balance or modesty or graciousness. We’re still acting as if we’re in trouble and we have to defend ourselves. So we kind of turned into assholes, you know?

Rich Kyanka: Social media was supposed to be about, “Hey, Grandma. How are you?” Now it’s like, “Oh my God, did you see what she wore yesterday? What a fucking cow that bitch is.” Everything is toxic — and that has to do with the internet itself. It was founded to connect people all over the world. But now you can meet people all over the world and then murder them in virtual reality and rape their pets.

Step 3

The arrival of Wall Streeters didn’t help …

Just as Facebook became the first overnight social-media success, the stock market crashed, sending money-minded investors westward toward the tech industry. Before long, a handful of companies had created a virtual monopoly on digital life.

Pao: It all goes back to Facebook. It was a success so quickly, and was so admired, that it changed the culture. It went from “I’m going to improve people’s lives” to “I’m going to build this product that everybody uses so I can make a lot of money.” Then Google went public, and all of a sudden you have these instant billionaires. No longer did you have to toil for decades. So in 2008, when the markets crashed, all those people from Wall Street who were motivated by money ended up coming out to Silicon Valley and going into tech. That’s when values shifted even more. The early, unfounded optimism about good coming out of the internet ended up getting completely distorted in the 2000s, when you had these people coming in with a different set of goals.

García: I think Silicon Valley has changed. After a while, the whole thing became more sharp-elbowed. It wasn’t hippies showing up anymore. There was a lot more of the libertarian, screw-the-government ethos, that whole idea of move fast, break things, and damn the consequences. It still flies under this marketing shell of “making the world a better place.” But under the covers, it’s this almost sociopathic scene.

Ethan Zuckerman: Over the last decade, the social-media platforms have been working to make the web almost irrelevant. Facebook would, in many ways, prefer that we didn’t have the internet. They’d prefer that we had Facebook.

García: If email were being invented now and Mark Zuckerberg had concocted it, it would be a vertically integrated, proprietary thing that nobody could build on.

Step 4

… And we paid a high price for keeping it free.

To avoid charging for the internet — while becoming fabulously rich at the same time — Silicon Valley turned to digital advertising. But to sell ads that target individual users, you need to grow a big audience — and use advancing technology to gather reams of personal data that will enable you to reach them efficiently.

Kyanka: Around 2000, after the dot-com bust, people were trying as hard as they could to recoup money through ads. So they wanted more people on their platforms. They didn’t care if people were crappy. They didn’t care if the people were good. They just wanted more bodies with different IP addresses loading up ads.

Harris: There was pressure from venture capital to grow really, really quickly. There’s a graph showing how many years it took different companies to get to 100 million users. It used to take ten years, but now you can do it in six months. So if you’re competing with other start-ups for funding, it depends on your ability to grow usage very quickly. Everyone in the tech industry is in denial. We think we’re making the world more open and connected, when in fact the game is just: How do I drive lots of engagement?

Dan McComas: The incentive structure is simply growth at all costs. I can tell you that from the inside, the board never asks about revenue. They honestly don’t care, and they said as much. They’re only asking about growth. When I was at Reddit, there was never a conversation at any board meeting about the users, or things that were going on that were bad, or potential dangers.

Pao: Reddit, when I was there, was about growth at all costs.

McComas: The classic comment that would come up in every board meeting was “Why aren’t you growing faster?” We’d say, “Well, we’ve grown by 40 million visitors since the last board meeting.” And the response was “That’s slower than the internet is growing — that’s not enough. You have to grow more.” Ultimately, that’s why Ellen and I were let go.

Pao: When you look at how much money Facebook and Google and YouTube print every day, it’s all about building the user base. Building engagement was important, and they didn’t care about the nature of engagement. Or maybe they did, but in a bad way. The more people who got angry on those sites — Reddit especially — the more engagement you would get.

Harris: If you’re YouTube, you want people to register as many accounts as possible, uploading as many videos as possible, driving as many views to those videos as possible, so you can generate lots of activity that you can sell to advertisers. So whether or not the users are real human beings or Russian bots, whether or not the videos are real or conspiracy theories or disturbing content aimed at kids, you don’t really care. You’re just trying to drive engagement to the stuff and maximize all that activity. So everything stems from this engagement-based business model that incentivizes the most mindless things that harm the fabric of society.

Lanier: What started out as advertising morphed into continuous behavior modification on a mass basis, with everyone under surveillance by their devices and receiving calculated stimulus to modify them. It’s a horrible thing that was foreseen by science-fiction writers. It’s straight out of Philip K. Dick or 1984. And despite all the warnings, we just walked right into it and created mass behavior-modification regimes out of our digital networks. We did it out of this desire to be both cool socialists and cool libertarians at the same time.

Zuckerman: As soon as you’re saying “I need to put you under surveillance so I can figure out what you want and meet your needs better,” you really have to ask yourself the questions “Am I in the right business? Am I doing this the right way?”

Kyanka: It’s really sad, because you have hundreds and hundreds of bigwig, smarty-pants guys at all these analytic firms, and they’re trying to drill down into the numbers and figure out what kind of goods and products people want. But they only care about the metrics. They say, “Well, this person is really interested in AR-15s. He buys ammunition in bulk. He really likes Alex Jones. He likes surveying hotels for the best vantage points.” They look at that and they say, “Let’s serve him these ads. This is how we’re going to make our money.” And they don’t care beyond that.

Harris: We cannot afford the advertising business model. The price of free is actually too high. It is literally destroying our society, because it incentivizes automated systems that have these inherent flaws. Cambridge Analytica is the easiest way of explaining why that’s true. Because that wasn’t an abuse by a bad actor — that was the inherent platform. The problem with Facebook is Facebook.

Step 5

Everything was designed to be really, really addictive.

The social-media giants became “attention merchants,” bent on hooking users no matter the consequences. “Engagement” was the euphemism for the metric, but in practice it evolved into an unprecedented machine for behavior modification.

Sandy Parakilas: One of the core things going on is that they have incentives to get people to use their service as much as they possibly can, so that has driven them to create a product that is built to be addictive. Facebook is a fundamentally addictive product that is designed to capture as much of your attention as possible without any regard for the consequences. Tech addiction has a negative impact on your health and on your children’s health. It enables bad actors to do new bad things, from electoral meddling to sex trafficking. It increases narcissism and people’s desire to be famous on Instagram. And all of those consequences ladder up to the business model of getting people to use the product as much as possible through addictive, intentional-design tactics, and then monetizing their users’ attention through advertising.

Harris: I had friends who worked at Zynga, and it was the same thing. Not how do we build games that are great for people, or that people really love, but how do we manipulate people into spending money on and creating false social obligations so your friend will plant corn on your farm? Zynga was a weed that grew through Facebook.

Losse: In a way, Zynga was too successful at this. They were making so much money that they were hacking the whole concept of Facebook as a social platform. The problem with those games is they weren’t really that social. You put money into the game, and then you took care of your fish or your farm or whatever it was. You spent a lot of time on Facebook, and people were getting addicted to it.

Guillaume Chaslot: The way AI is designed will have a huge impact on the type of content you see. For instance, if the AI favors engagement, like on Facebook and YouTube, it will incentivize divisive content, because divisive content is very efficient to keep people online. If the metric you try to optimize is likes, or the little arcs on Facebook, then the type of content people will see and share will be very different.

Roger McNamee: If you parse what Unilever said about Facebook when they threatened to pull their ads, their message was “Guys, your platform’s too good. You’re basically harming our customers. Because you’re manipulating what they think. And more importantly, you’re manipulating what they feel. You’re causing so much outrage that they become addicted to outrage.” The dopamine you get from outrage is just so addictive.

Harris: That blue Facebook icon on your home screen is really good at creating unconscious habits that people have a hard time extinguishing. People don’t see the way that their minds are being manipulated by addiction. Facebook has become the largest civilization-scale mind-control machine that the world has ever seen.

Chaslot: Tristan was one of the first people to start talking about the problem of this kind of thinking.

Harris: I warned about it at Google at the very beginning of 2013. I made that famous slide deck that spread virally throughout the company to 20,000 people. It was called “A Call to Minimize Distraction & Respect Users’ Attention.” It said the tech industry is creating the largest political actor in the world, influencing a billion people’s attention and thoughts every day, and we have a moral responsibility to steer people’s thoughts ethically. It went all the way up to Larry Page, who had three separate meetings that day where people brought it up. And to Google’s credit, I didn’t get fired. I was supported to do research on the topic for three years. But at the end of the day, what are you going to do? Knock on YouTube’s door and say, “Hey, guys, reduce the amount of time people spend on YouTube. You’re interrupting people’s sleep and making them forget the rest of their life”? You can’t do that, because that’s their business model. So nobody at Google specifically said, “We can’t do this — it would eat into our business model.” It’s just that the incentive at a place like YouTube is specifically to keep people hooked.

Step 6

At first, it worked — almost too well.

None of the companies hid their plans or lied about how their money was made. Yet as users became ever more deeply enmeshed in the increasingly addictive web of surveillance, the leading digital platforms only grew more popular.

Pao: There’s this idea that, “Yes, they can use this information to manipulate other people, but I’m not gonna fall for that, so I’m protected from being manipulated.” Slowly, over time, you become addicted to the interactions, so it’s hard to opt out. And they just keep taking more and more of your time and pushing more and more fake news. It becomes easy just to go about your life and assume that things are being taken care of.

McNamee: If you go back to the early days of propaganda theory, Edward Bernays had a hypothesis that to implant an idea and make it universally acceptable, you needed to have the same message appearing in every medium all the time for a really long period of time. The notion was it could only be done by a government. Then Facebook came along, and it had this ability to personalize for every single user. Instead of being a broadcast model, it was now 2.2 billion individualized channels. It was the most effective product ever created to revolve around human emotions.

García: If you pulled the plug on Facebook, there would literally be riots in the streets. So in the back of Facebook’s mind, they know that they’re stepping on people’s toes. But in the end, people are happy to have the product, so why not step on toes? This is where they just wade into the whole cesspit of human psychology. The algorithm, by default, placates you by shielding you from the things you don’t want to hear about. That, to me, is the scary part. The real problem isn’t Facebook — it’s humans.

McNamee: They’re basically trying to trigger fear and anger to get the outrage cycle going, because outrage is what makes you be more deeply engaged. You spend more time on the site and you share more stuff. Therefore, you’re going to be exposed to more ads, and that makes you more valuable. In 2008, when they put their first app on the iPhone, the whole ballgame changed. Suddenly Bernays’s dream of the universal platform reaching everybody through every medium at the same time was achieved by a single device. You marry the social triggers to personalized content on a device that most people check on their way to pee in the morning and as the last thing they do before they turn the light out at night. You literally have a persuasion engine unlike any created in history.

Step 7

No one from Silicon Valley was held accountable …

No one in the government — or, for that matter, in the tech industry’s user base — seemed interested in bringing such a wealthy, dynamic sector to heel.

García: The real issue is that people don’t assign moral agency to algorithms. When shit goes sideways, you want someone to fucking shake a finger at and scream at. But Facebook just says, “Don’t look at us. Look at this pile of code.” Somehow, the human sense of justice isn’t placated.

Parakilas: In terms of design, companies like Facebook and Twitter have not prioritized features that would protect people against the most malicious cases of abuse. That’s in part because they have no liability when something goes wrong. Section 230 of the Communications Decency Act of 1996, which was originally envisioned to protect free speech, effectively shields internet companies from the actions of third parties on their platforms. It enables them to not prioritize the features they need to build to protect users.

McNamee: In 2016, I started to see things that concerned me. First it was the Bernie memes, the way they were spreading. Then there was the way Facebook was potentially conferring a significant advantage to negative campaign messages in Brexit. Then there were the Russian hacks on our own election. But when I talked to Dan Rose, Facebook’s vice-president of partnerships, he basically throws the Section 230 thing at me: “Hey, we’re a platform, not a media company.” And I’m going, “You’ve got 1.7 billion users. If the government decides you’re a media company, it doesn’t matter what you assert. You’re messing with your brand here. I’m really worried that you’re gonna kill the company, and for what?” There is no way to justify what happened here. At best, it’s extreme carelessness.

Step 8

… Even as social networks became dangerous and toxic.

With companies scaling at unprecedented rates, user security took a backseat to growth and engagement. Resources went to selling ads, not protecting users from abuse.

Lanier: Every time there’s some movement like Black Lives Matter or #MeToo, you have this initial period where people feel like they’re on this magic-carpet ride. Social media is letting them reach people and organize faster than ever before. They’re thinking, Wow, Facebook and Twitter are these wonderful tools of democracy. But it turns out that the same data that creates a positive, constructive process like the Arab Spring can be used to irritate other groups. So every time you have a Black Lives Matter, social media responds by empowering neo-Nazis and racists in a way that hasn’t been seen in generations. The original good intention winds up empowering its opposite.

Parakilas: During my time at Facebook, I thought over and over again that they allocated resources in a way that implied they were almost entirely focused on growth and monetization at the expense of user protection. The way you can understand how a company thinks about what its key priorities are is by looking at where they allocate engineering resources. At Facebook, I was told repeatedly, “Oh, you know, we have to make sure that X, Y, or Z doesn’t happen.” But I had no engineers to do that, so I had to think creatively about how we could solve problems around abuse that was happening without any engineers. Whereas teams that were building features around advertising and user growth had a large number of engineers.

Chaslot: As an engineer at Google, I would see something weird and propose a solution to management. But just noticing the problem was hurting the business model. So they would say, “Okay, but is it really a problem?” They trust the structure. For instance, I saw this conspiracy theory that was spreading. It’s really large — I think the algorithm may have gone crazy. But I was told, “Don’t worry — we have the best people working on it. It should be fine.” Then they conclude that people are just stupid. They don’t want to believe that the problem might be due to the algorithm.

Parakilas: One time a developer who had access to Facebook’s data was accused of creating profiles of people without their consent, including children. But when we heard about it, we had no way of proving whether it had actually happened, because we had no visibility into the data once it left Facebook’s servers. So Facebook had policies against things like this, but it gave us no ability to see what developers were actually doing.

Kyanka: It’s important to have rules and to follow through on them. When I started my forum Something Awful, one of my first priorities was to create a very detailed, comprehensive list spelling out what is allowed and what is not allowed. The problem with Twitter is they never defined themselves. They’re trying to put up the false pretense that they’re banning the alt-right, but it’s just a metrics game. They don’t have anyone who can direct the community and communicate with the community, so they’re just revving their tires in the sand. They’re a bunch of math nerds who probably haven’t left their own business in years, so they have no idea how to actually speak to females or people with other viewpoints without going to wikiHow. They’ve got no future unless they can go to the person who is currently teaching Mark Zuckerberg how to be a human being.

McComas: Ultimately the problem Reddit has is the same as Twitter: By focusing on growth and growth only, and ignoring the problems, they amassed a large set of cultural norms on their platforms that stem from harassment or abuse or bad behavior. They have worked themselves into a position where they’re completely defensive and they can just never catch up on the problem. I don’t see any way it’s going to improve. The best they can do is figure out how to hide the bad behavior from the average user.

Step 9

… And even as they invaded our privacy.

The more features Facebook and other platforms added, the more data users willingly, if unwittingly, released to them and the data brokers who power digital advertising.

Richard Stallman: What is data privacy? That means that if a company collects data about you, it should somehow protect that data. But I don’t think that’s the issue. The problem is that these companies are collecting data about you, period. We shouldn’t let them do that. The data that is collected will be abused. That’s not an absolute certainty, but it’s a practical extreme likelihood, which is enough to make collection a problem.

Kyanka: No matter where you go, especially in this country, advertisers follow. And when advertisers follow, they begin thought groups, and then think tanks, and then they begin building up these gigantic structures dedicated to stealing as much data and seeing how far they can push boundaries before somebody starts pushing back.

Losse: I’m not surprised at what’s going on now with Cambridge Analytica and the scandal over the election. For a long time, the accepted idea at Facebook was: Giving developers as much data as possible to make these products is good. But to think that, you also have to not think about the data implications for users. That’s just not your priority.

Pao: Nobody wants to take responsibility for the election based on how people were able to manipulate their platform. People knew they gave Facebook permission to share their information with developers, even if the average user didn’t understand the implications. I know I didn’t understand the implications — how much that information could be used to manipulate our emotions.

Stallman: I never tell stores who I am. I never let them know. I pay cash and only cash for that reason. I don’t care whether it’s a local store or Amazon — no one has a right to keep track of what I buy.

Step 10

Then came 2016.

The election of Donald Trump and the triumph of Brexit, two campaigns powered in large part by social media, demonstrated to tech insiders that connecting the world — at least via an advertising-surveillance scheme — doesn’t necessarily lead to that hippie utopia.

Lanier: A lot of the rhetoric of Silicon Valley that has a utopian ring about creating meaningful communities where everybody’s creative and people collaborate — I was one of the first authors on some of that rhetoric a long time ago. So it kind of stings for me to see it misused. I used to talk about how virtual reality could be a tool for empathy. Then I see Mark Zuckerberg talking about how VR could be a tool for empathy while being profoundly non-empathic — using VR to tour Puerto Rico after the hurricane. One has this feeling of having contributed to something that’s gone very wrong.

Kyanka: My experience with Reddit and my experience with 4chan, if you combine them together, is probably under eight minutes total. But both of them are inadvertently my fault, because I thought they were so terrible that I kicked them off of Something Awful. And then somehow they got worse.

Chaslot: I realized personally that things were going wrong in 2011, when I was working at Google. I was working on this YouTube recommendation algorithm, and I realized that the algorithm was always giving you the same type of content. For instance, if I give you a video of a cat and you watch it, the algorithm thinks, Oh, he must really like cats. That creates these filter bubbles where people just see one type of information. But when I notified my managers at Google and proposed a solution that would give a user more control so he could get out of the filter bubble, they realized that this type of algorithm would not be very beneficial for watch time. They didn’t want to push that, because the entire business model is based on watch time.

Harris: A lot of people feel enormously regretful about how this has turned out. I mean, who wants to be part of the system that is sending the world in really dangerous directions? I wasn’t personally responsible for it — I called out the problem early. But everybody in the industry knows we need to do things differently. That kind of conscience is weighing on everybody. The reason I’m losing sleep is I’m worried that the fabric of society will fall apart if we don’t correct these things soon enough. We’re talking about people’s lives.

McComas: I fundamentally believe that my time at Reddit made the world a worse place. That sucks. It sucks to have to say that about myself.

Step 11

Employees are starting to revolt.

Tech-industry executives aren’t likely to bite the hand that feeds them. But maybe their employees — the ones who signed up for the mission as much as the money — can rise up and make a change.

García: Some Facebook employees felt uncomfortable when they went back home over Thanksgiving, after the election. They suddenly had all these hard questions from their mothers, who were saying, “What the hell? What is Facebook doing?” Suddenly they were facing flak for what they thought was a supercool job.

Harris: There’s a massive demoralizing wave that is hitting Silicon Valley. It’s getting very hard for companies to attract and retain the best engineers and talent when they realize that the automated system they’ve built is causing havoc everywhere around the world. So if Facebook loses a big chunk of its workforce because people don’t want to be part of that perverse system anymore, that is a very powerful and very immediate lever to force them to change.

Duruk: I was at Uber when all the madness was happening there, and it did affect recruiting and hiring. I don’t think these companies are going to go down because they can’t attract the right talent. But there’s going to be a measurable impact. It has become less of a moral positive now — you go to Facebook to write some code and then you go home. They’re becoming just another company.

McNamee: For three months starting in October 2016, I appealed to Mark Zuckerberg and Sheryl Sandberg directly. I did it really politely. I didn’t talk to anybody outside Facebook. I did it as a friend of the firm. I said, “Guys, I think this election manipulation has all the makings of a crisis, and I think you need to get on top of it. You need to do forensics, and you need to determine what, if any, role you played. You need to make really substantive changes to your business model and your algorithms so the people know it’s not going to happen again. If you don’t do that, and it turns out you’ve had a large impact on the election, your brand is hosed.” But if you can’t convince Mark and Sheryl, then you have to convince the employees. One approach, which has never been tested in the tech world, is what I would characterize as the Ellsberg strategy: Somebody on the inside, like Daniel Ellsberg releasing the Pentagon Papers, says, “Oh my God, something horrible is going on here,” and the resulting external pressure forces a change. That’s the only way you have a snowball’s chance in hell of triggering what would actually be required to fix things and make it work.

Step 12

To fix it, we’ll need a new business model …

If the problem is in the way the Valley makes money, it’s going to have to make money a different way. Maybe by trying something radical and new — like charging users for goods and services.

Parakilas: They’re going to have to change their business model quite dramatically. They say they want to make time well spent the focus of their product, but they have no incentive to do that, nor have they created a metric by which they would measure that. But if Facebook charged a subscription instead of relying on advertising, then people would use it less and Facebook would still make money. It would be equally profitable and more beneficial to society. In fact, if you charged users a few dollars a month, you would equal the revenue Facebook gets from advertising. It’s not inconceivable that a large percentage of their user base would be willing to pay a few dollars a month.

Lanier: At the time Facebook was founded, the prevailing belief was that in the future there wouldn’t be paid people making movies and television, because armies of unpaid volunteers, organized through our networks, would make superior content, just like happened with Wikipedia. But what actually happened is when people started paying for Netflix, we got what we call Peak TV. Things got much better as a result of it being monetized to subscribers. So if we outlawed advertising and asked people to pay directly for things like Facebook, the only customer would be the user. There would no longer be third parties paying to influence you. We would also pay people who opt to provide their data, which would become a source of economic growth. In that situation, I think we would have a chance of achieving Peak Social Media. We might actually see things improve a great deal.

Step 13

… And some tough regulation.

While we’re at it, where has the government been in all this?

Stallman: We need a law. Fuck them — there’s no reason we should let them exist if the price is knowing everything about us. Let them disappear. They’re not important — our human rights are important. No company is so important that its existence justifies setting up a police state. And a police state is what we’re heading toward.

Duruk: The biggest existential problem for them would be regulation. Because it’s clear that nothing else will stop these companies from using their size and their technology to just keep growing. Without regulation, we’ll basically just be complaining constantly, and not much will change.

McNamee: Three things. First, there needs to be a law against bots and trolls impersonating other people. I’m not saying no bots. I’m just saying bots have to be really clearly marked. Second, there have to be strict age limits to protect children. And third, there has to be genuine liability for platforms when their algorithms fail. If Google can’t block the obviously phony story that the kids in Parkland were actors, they need to be held accountable.

Stallman: We need a law that requires every system to be designed in a way that achieves its basic goal with the least possible collection of data. Let’s say you want to ride in a car and pay for the ride. That doesn’t fundamentally require knowing who you are. So services which do that must be required by law to give you the option of paying cash, or using some other anonymous-payment system, without being identified. They should also have ways you can call for a ride without identifying yourself, without having to use a cell phone. Companies that won’t go along with this — well, they’re welcome to go out of business. Good riddance.

Step 14

Maybe nothing will change.

The scariest possibility is that nothing can be done — that the behemoths of the new internet are too rich, too powerful, and too addictive for anyone to fix.

McComas: I don’t think the existing platforms are going to change.

García: Look, I mean, advertising sucks, sure. But as the ad tech guys say, “We’re the people who pay for the internet.” It’s hard to imagine a different business model other than advertising for any consumer internet app that depends on network effects.

Pao: You have these people who have developed these wide-ranging problems, but they haven’t used their skill and their innovation and their giant teams and their huge coffers to solve them. What makes you think they’re going to change and care about these problems today?

Losse: I don’t see Facebook’s business being profoundly affected by this. It doesn’t matter what people say — they’ve come to consider the infrastructure as necessary to their lives, and they’re not leaving it.

Step 15

… Unless, at the very least, some new people are in charge.

If Silicon Valley’s problems are a result of bad decision-making, it might be time to look for better decision-makers. One place to start would be outside the homogeneous group currently in power.

Losse: When I was writing speeches for Mark Zuckerberg, I spent a lot of time thinking about what the pushback would be on his vision. For me, the obvious criticism is: Why should people give up their power to this bigger force, even if it’s doing all these positive things and connecting them to their friends? That was the hardest thing for Zuckerberg to get his head around, because he has been in control of this thing for so long, from the very beginning. If you’re Mark, you’d be thinking, What do they want? I did all this. I am in control of it. But it does seem like the combined weight of the criticism is starting to wear him thin.

Pao: I’ve urged Facebook to bring in people who are not part of a homogeneous majority to their executive team, to every product team, to every strategy discussion. The people who are there now clearly don’t understand the impact of their platforms and the nature of the problem. You need people who are living the problem to clarify the extent of it and help solve it.

Losse: At some point, they’re going to have to understand the full force of the critique they’re facing and respond to it directly.

With Regrets

“The web that many connected to years ago is not what new users will find today. The fact that power is concentrated among so few companies has made it possible to weaponize the web at scale.” —Tim Berners-Lee, creator of the World Wide Web

“We really believed in social experiences. We really believed in protecting privacy. But we were way too idealistic. We did not think enough about the abuse cases.” —Sheryl Sandberg, chief operating officer at Facebook

“Let’s build a comprehensive database of highly personal targeting info and sell secret ads with zero public scrutiny. What could go wrong?” —Pierre Omidyar, founder of eBay

“I don’t have a kid, but I have a nephew that I put some boundaries on. There are some things that I won’t allow; I don’t want them on a social network.” —Tim Cook, CEO of Apple

“It’s a social-validation feedback loop … exactly the kind of thing that a hacker like myself would come up with, because you’re exploiting a vulnerability in human psychology. The inventors, creators — it’s me, it’s Mark [Zuckerberg], it’s Kevin Systrom on Instagram, it’s all of these people — understood this consciously. And we did it anyway.” —Sean Parker, first president of Facebook

“I wake up in cold sweats every so often thinking, What did we bring to the world?” —Tony Fadell, known as one of the “fathers of the iPod”

“The short-term, dopamine-driven feedback loops that we have created are destroying how society works. No civil discourse, no cooperation; misinformation, mistruth. This is not about Russians’ ads. This is a global problem.” —Chamath Palihapitiya, former VP of user growth at Facebook

“The government is going to have to be involved. You do it exactly the same way you regulated the cigarette industry. Technology has addictive qualities that we have to address, and product designers are working to make those products more addictive. We need to rein that back.” —Marc Benioff, CEO of Salesforce

Things That Ruined the Internet

Cookies (1994)

The original surveillance tool of the internet. Developed by programmer Lou Montulli to eliminate the need for repeated log-ins, cookies also enabled third parties like Google to track users across the web. The risk of abuse was low, Montulli thought, because only a “large, publicly visible company” would have the capacity to make use of such data. The result: digital ads that follow you wherever you go online.

The Farmville vulnerability (2007)

When Facebook opened up its social network to third-party developers, enabling them to build apps that users could share with their friends, it inadvertently opened the door a bit too wide. By tapping into user accounts, developers could download a wealth of personal data — which is exactly what a political-consulting firm called Cambridge Analytica did to as many as 87 million Facebook users, most of them American.

Algorithmic sorting (2006)

It’s how the internet serves up what it thinks you want — automated calculations based on dozens of hidden metrics. Facebook’s News Feed uses it every time you hit refresh, and so does YouTube. It’s highly addictive — and it keeps users walled off in their own personalized loops. “When social media is designed primarily for engagement,” tweets Guillaume Chaslot, an engineer who worked on YouTube’s recommendation algorithm, “it is not surprising that it hurts democracy and free speech.”

The “like” button (2009)

Initially known as the “awesome” button, the icon was designed to unleash a wave of positivity online. But its addictive properties became so troubling that one of its creators, Leah Pearlman, has since renounced it. “Do you know that episode of Black Mirror where everyone is obsessed with likes?” she told Vice last year. “I suddenly felt terrified of becoming those people — as well as thinking I’d created that environment for everyone else.”

Pull-to-refresh (2009)

Developed by software developer Loren Brichter for an iPhone app, the simple gesture — scrolling downward at the top of a feed to fetch more data — has become an endless, involuntary tic. “Pull-to-refresh is addictive,” Brichter told The Guardian last year. “I regret the downsides.”

Pop-up ads (1996)

While working at an early blogging platform, Ethan Zuckerman came up with the now-ubiquitous tool for separating ads from content that advertisers might find objectionable. “I really did not mean to break the internet,” he told the podcast Reply All. “I really did not mean to bring this horrible thing into people’s lives. I really am extremely sorry about this.”

—Brian Feldman

*This article appears in the April 16, 2018, issue of New York Magazine.