My trip into the upside-down world of Amazon reviews of cheapo electronics began because of a lost dongle. I was on an early morning flight with two chatty dudes behind me, bonding over living in Brooklyn and working in consulting. I badly wanted to drown out the conversation, but my wireless headphones were dead and I couldn’t find the 3.5 mm-to-Lightning dongle that’d let me plug my earbuds in. Luckily, I am a galaxy-brain genius, and came up with the brilliant solution to avoid this in the future: I would just buy a bunch of dongles.

It wasn’t hard finding cheap dongle deals on Amazon. The problem was the huge variance in the reviews — often for ostensibly the exact same product. It’s an issue I’d noticed plenty of times while hunting for cheap electronics on Amazon, but I couldn’t stop trying to figure out the rhyme or reason behind it all.

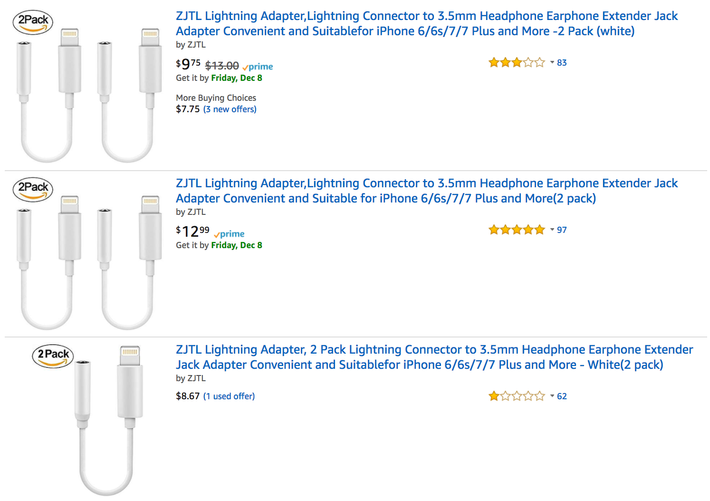

Here, for instance, are three separate listings for two-packs of ZJTL dongles, all for different prices, all with wildly differing reviews:

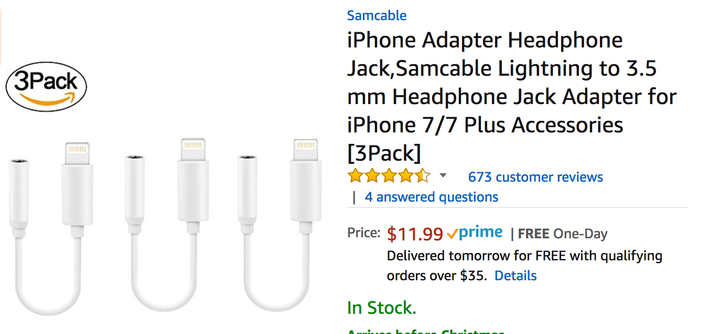

Or here are two listings for a three-pack of Samcable dongles. Here is one, with 673 customer reviews and an average of 4.6 stars:

Here are, as near as I can tell, the exact same cables, for about a dollar more, but with 76 customer reviews and an average of 1.1 stars:

So what’s going on here? Scanning the listing for the highly reviewed three-pack, I found there wasn’t much of a range — they were either wonderful or terrible. Of the 673 reviews, 654 were five-star reviews, while the remaining 19 were one-star reviews. And those five-star reviews had some quirks.

I couldn’t find a single five-star review with a “Verified Purchase” tag confirming that the reviewer had bought the product. Meanwhile, every single one of the 19 one-star reviews for the Samcable dongles was a verified purchase, and all had the same basic complaint: “Doesn’t work.” Here’s a taste of what you’d see from one-star reviews:

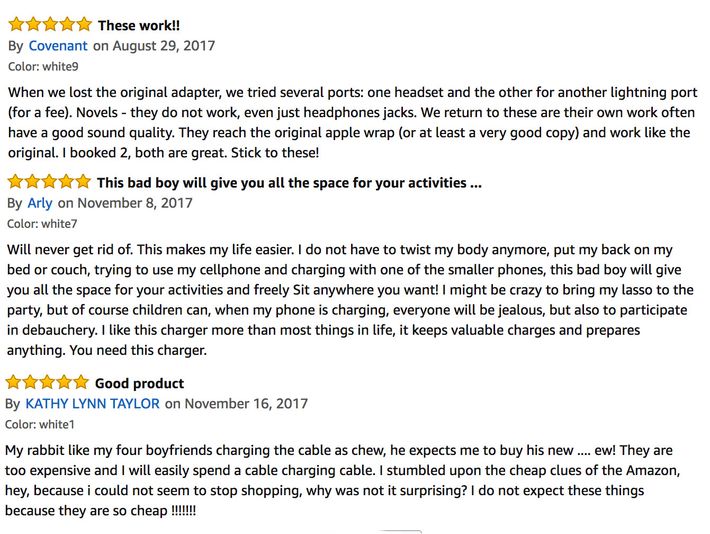

Here, on the other hand, is what the five-star reviews look like:

Not only are the reviews syntactically bizarre, many of them appear to be written about phone chargers, not headphone dongles. Amazon attributed this latter problem to a technical error (different color options for the product were actually different products entirely), but if it’s an error, it’s a widespread one. As of this writing, this two-in-one Lightning splitter from eurHpandray has much the same problem: hundreds of five-star reviews, none of them verified purchases, with many listing different “color” options that are also seemingly for different products. In effect, this means that anyone who doesn’t spend inordinate amounts of time examining reviews would come away with the impression that the individual product had hundreds of positive reviews.

At this point, I was deeply leery of buying any dongle off of Amazon. But I was much more curious about the reviews themselves. Clearly, these reviews were unreliable. But how did they get there? And was there a way to sort out the sham reviews from honest feedback?

I turned to two sites, Fakespot and ReviewMeta, which use publicly available review metadata and algorithms to try to suss out which reviews are “unreliable” or “unnatural.” The Samcable listing with 673 total reviews got a grade of F from Fakespot, which declared that 100 percent of the reviews were “low-quality.” After discarding what its algorithm determined were untrustworthy reviews, it adjusted that 4.6 average rating down to a 0.0 rating. ReviewMeta wasn’t much kinder. Under its criteria, the highly rated Samcables went from an average review score of 4.6 to 1.1, with only 81 of the 673 reviews passing as natural.

Ming Ooi, one of the co-founders of Fakespot, is blunt in his assessment of Amazon’s reviews ecosystem. “About 40 percent of reviews we see on Amazon are unreliable,” he says — though his site is only checking reviews that people are taking the time to examine on Fakespot, which likely skews the results. (When I asked about the weird reviews where different color options for the product were actually different products entirely under the same listing, he confirmed that it was a “newish trend we are starting to see.”)

Both Ooi and Tommy Noonan, founder of ReviewMeta, pointed out that I was likely seeing the extreme end of the spectrum because I was looking for cheap electronic commodities, where reviews can be make-or-break for would-be sellers. But ultimately, this is an effect of Amazon’s crowded marketplace. Noonan says that many sellers may feel forced to gin up reviews somehow. “They’re caught in a Catch-22. You have to get reviews to sell, but to get reviews you need to sell products,” he says.

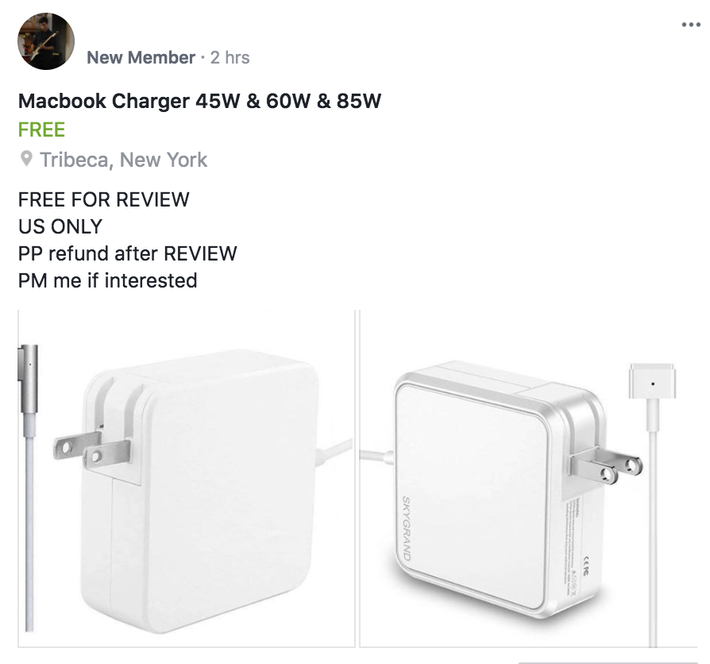

What’s slightly ironic about all of this is that Amazon made a major push at the end of last year to clean up its reviews system. Until October of 2016, Amazon reviews were glutted with reviews from what was informally known as Amazon review clubs. The review clubs worked like this: You’d sign up, get a free or heavily discounted product, whether it was an egg-cracker or a queen-size mattress, and in exchange you’d post a review. You just had to include words to the effect of “I received this product at a discount/for free in exchange for my honest review” somewhere in your review. It was an ideal ecosystem for many. Consumers got items on the cheap. Sellers got five-star reviews (the “honest” part was largely lip service; Ooi of Fakespot says anyone who didn’t post five-star reviews would quickly find themselves iced out of the review club). The person running the review club could charge sellers for access to customers.

In September of 2016, Noonan made a video showing that reviews with the “free or discounted” disclaimer in the text had much higher scores than reviews that didn’t. The video landed on the front page of Reddit. Within a few weeks of the video going viral, Amazon eliminated the ability for sellers to incentivize reviews through offering discounted or free products.

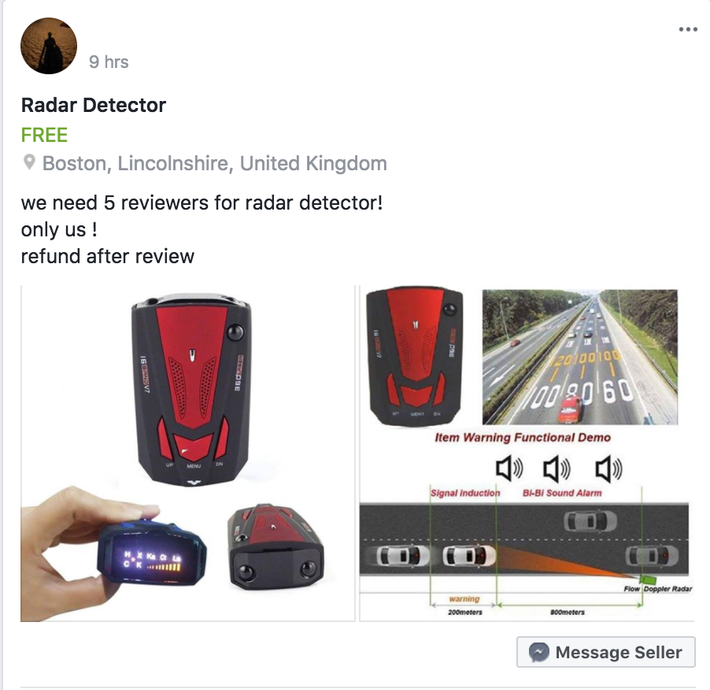

But this mostly just drove review clubs underground. I was able to quickly find and join several closed groups on Facebook that served as de facto Amazon review clubs. Each day, I found hundreds of posts with various sellers offering either free or deeply discounted items to U.S.-based buyers if they promised to review the item, with refunds then issued via PayPal. (Amusingly, there is currently a scandal rocking the underground review clubs: People are scamming would-be sellers by faking five-star reviews and collecting PayPal refunds for reviews they did not leave.)

And it doesn’t even need to be as complicated as that. A video went viral earlier this year purporting to show a click farm in China, with thousands of phones dedicated to inflating the scores of mobile apps. Ooi says similar operations exist, based mainly in China and Eastern Europe, to do the same for Amazon reviews.

Amazon, for its part, says that it’s actively adjusting its algorithms to fight sham reviews. The company says it uses a combination of human moderation and machine learning to combat fake reviews, though it declined to say how many actual human moderators are involved in the effort. It suggests that sellers who want reviews look to the Amazon Vine program, which offers products to trusted reviewers. There’s also the Amazon Early Reviewer program, a service Amazon offers sellers who need to get reviews from customers: Amazon will offer customers who purchase a product a small Amazon gift card if they choose to review it (whether that review is one star or five stars doesn’t matter). But both of these programs are geared toward higher-end or more established sellers; on message boards dedicated to selling on Amazon, many complain bitterly about the difficulty of getting into the programs or their overall inefficacy compared to other methods.

To my eyes, Amazon could make its reviews more reliable in several ways. For one, it could weight reviews from verified purchases more heavily in its system. If none of a product’s positive reviews comes from a verified purchase, while every negative review does, that should have a much larger impact on the displayed average score. Amazon says it’s reducing the number of unverified-purchase reviews an individual account can leave per week; this is a helpful step forward, but more can and should be done.
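To make the verified-purchase idea concrete, here is a minimal Python sketch. It is not Amazon’s actual scoring, which isn’t public. It assumes, based on what I found on the Samcable listing, that all 654 five-star reviews are unverified and all 19 one-star reviews are verified; the `Review` class, the `weighted_average` function, and the weight of 5 are hypothetical choices made purely for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Review:
    stars: int        # 1 through 5
    verified: bool    # True if tagged "Verified Purchase"


def weighted_average(
    reviews: List[Review],
    verified_weight: float = 5.0,    # hypothetical weight, not Amazon's
    unverified_weight: float = 1.0,  # hypothetical weight, not Amazon's
) -> Optional[float]:
    """Average star rating where verified reviews count more heavily."""
    total_stars = 0.0
    total_weight = 0.0
    for review in reviews:
        weight = verified_weight if review.verified else unverified_weight
        total_stars += weight * review.stars
        total_weight += weight
    if total_weight == 0:
        return None  # no reviews, so no meaningful average
    return total_stars / total_weight


# Assumed breakdown of the 673-review Samcable listing.
samcable = [Review(5, False)] * 654 + [Review(1, True)] * 19

plain = weighted_average(samcable, verified_weight=1.0)  # simple mean
weighted = weighted_average(samcable)                    # verified count 5x

print(f"plain mean: {plain:.2f}")
print(f"verified-weighted mean: {weighted:.2f}")
```

Under these assumptions, even a fivefold weight only nudges the score from about 4.89 to about 4.49, because the unverified reviews outnumber the verified ones more than thirty to one. A weight would have to be far heavier, or unverified reviews capped outright, to change the picture much.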

It could also provide more information about reviewers directly on the review page: You can currently click into some reviewers’ pages and see what else they’ve reviewed — unsurprisingly, many people giving suspicious five-star ratings to cheap electronics tend to rate exactly two items as five stars and then never review anything again, or have many reviews, but choose to keep them all hidden. There are real privacy concerns here, but other services like Yelp and TripAdvisor have figured out ways to provide useful metrics about how much to trust an individual reviewer — these same metrics would only help a confused Amazon shopper.

The most clear-eyed insight about the current state of Amazon reviews came from Pat Lum, who currently runs Wyatt Deals. Wyatt Deals sells items at deep discounts in order to juice sales, which in turn helps sellers rank higher in Amazon’s internal search engine, an entirely different and equally fierce battleground for sellers. Before he ran Wyatt Deals, however, he co-founded HonestFew, an Amazon review club that was made obsolete after Amazon declared incentivized reviews dead last October.

Lum, a genial Canadian, offered this assessment: “The products Amazon shows you are the products that are most profitable for Amazon as a company.” If Amazon were to suddenly do a massive sweep of existing reviews as aggressive as what Fakespot or ReviewMeta might do, you’d suddenly see a lot more products with far fewer reviews, and a lot of customers suddenly uncertain about what exactly to buy.

In a statement to Select All, Amazon said: “Amazon is investing heavily to detect and prevent inauthentic reviews. These reviews make up a tiny percentage of all reviews on Amazon but even one is unacceptable. In addition to advance detection, we’ve filed lawsuits against more than 1,000 defendants for reviews abuse and will continue to pursue legal action against the root cause of reviews abuse as well as the number of individuals and organizations who supply fraudulent reviews in exchange for compensation. Customer reviews are one of the most valuable tools we offer for making informed purchase decisions and we work hard to make sure they are doing their job.”

So what are you, the average Amazon shopper, to do? You can avail yourself of sites like Fakespot and ReviewMeta. Both readily admit that their algorithms aren’t perfect, but they do help spotlight products with “too good to be true” reviews attached to them. You can read reviews yourself, and check for things like a ton of unverified five-star reviews — in my experience, a sure sign that something fishy is going on with a product’s review score. And you can click through and check out a reviewer’s history. If a reviewer only has a few reviews, or has hidden their review history, take their feedback with a grain of salt.
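If you wanted to turn those rules of thumb into something mechanical, a rough Python sketch might look like the one below. To be clear, this is not how Fakespot or ReviewMeta work; the `Review` fields, the `red_flags` function, and every threshold are hypothetical, and you would have to collect the review data by hand.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Review:
    stars: int                      # 1 through 5
    verified: bool                  # tagged "Verified Purchase"
    reviewer_total: Optional[int]   # reviews on the profile; None if hidden


def red_flags(
    reviews: List[Review],
    max_unverified_share: float = 0.5,  # hypothetical threshold
    min_history: int = 3,               # hypothetical threshold
    max_thin_share: float = 0.5,        # hypothetical threshold
) -> List[str]:
    """Return human-readable warnings for a listing's reviews."""
    flags: List[str] = []

    # Check 1: are most of the five-star reviews unverified?
    five_star = [r for r in reviews if r.stars == 5]
    if five_star:
        unverified = sum(1 for r in five_star if not r.verified)
        if unverified / len(five_star) > max_unverified_share:
            flags.append("most five-star reviews are unverified")

    # Check 2: do most reviewers have a hidden or very short history?
    if reviews:
        thin = sum(
            1
            for r in reviews
            if r.reviewer_total is None or r.reviewer_total < min_history
        )
        if thin / len(reviews) > max_thin_share:
            flags.append("most reviewers have a hidden or thin history")

    return flags


# Made-up listing: ten unverified raves from near-empty profiles,
# two verified complaints from long-time reviewers.
suspect = [Review(5, False, 2)] * 10 + [Review(1, True, 40)] * 2
for warning in red_flags(suspect):
    print(warning)
```

A listing that trips either check isn’t necessarily fraudulent, but it is a cue to read the individual reviews before trusting the star average.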

Still, the problem remained: How could I make sure I wasn’t stuck on an airplane again without a dongle for a pair of earbuds? After digging around further, I ended up settling on something a bit more mundane than buying a three-pack of dongles of dubious quality. My search led me to the (unfortunately named) Dongle Dangler, which lets me stick my Lightning dongle on my key chain.

Unable to help myself, I ran the listing through Fakespot and ReviewMeta. Fakespot graded it a bit more gently than any of the Lightning dongles I’d looked at, knocking a few stars off but generally approving, and ReviewMeta was overall quite positive, discounting a few reviews but keeping its average rating of 4.8 stars intact. I hit buy, and it should be here by the end of the week. We’ll see if I end up leaving a review.